For a fleet operator, safety is no longer just a CSR slogan. It’s a line item that shows up in insurance premiums, downtime hours, claims payouts, driver turnover, and brand risk.

Over the past few years, AI dashcams have quietly moved from experimental pilot projects to standard equipment in many leading fleets. The global market is projected to grow from roughly $3.5 billion in 2023 to over $5.2 billion by 2030, driven by a combination of stricter regulations, tighter margins, and the need for real-time operational visibility.

This article looks at AI dashcams from a fleet and technical buyer’s point of view: what problems do they actually solve, how do they plug into your existing architecture, and where is the market heading?

Why Fleets Are Investing: From Compliance to Controllable Risk

For modern fleets, the decision to deploy AI dashcams is rarely about gadgets. It’s about managing three core risks:

- Regulatory risk – staying compliant with fast-evolving safety rules.

- Accident & liability risk – reducing the frequency and severity of collisions.

- Operational risk – improving driver behavior and protecting assets in real time.

Regulations as a Direct Business Driver

In Europe, the regulatory environment has become a major catalyst:

- UN R151 – Blind Spot Information System (BSIS): Requires heavy vehicles to detect cyclists in the near-side blind spot and warn the driver. For urban bus and truck fleets, this is no longer optional.

- UN R159 – Moving Off Information System (MOIS): Addresses low-speed collision risks when a truck or bus starts moving from standstill, especially in city centers and depots.

These rules are expected to drive demand for hundreds of thousands of AI-enabled safety systems per year, representing a cumulative market opportunity of $10–15 billion over the coming years. For OEMs and larger fleets, “wait and see” is no longer a realistic option: the requirements already apply to new type approvals, with coverage of all newly registered vehicles following.

Outside Europe, similar trends are emerging:

- China is pushing “Vehicle–Road–Cloud Integration”, encouraging connected, sensor-based safety.

- In the U.S., NHTSA and state-level initiatives are tightening expectations around advanced safety systems and data-driven risk control.

For technical buyers, the takeaway is simple: AI dashcams are becoming one of the easiest and most flexible ways to align with current and upcoming safety requirements, especially in mixed or legacy fleets.

What’s Inside: A Practical Look at AI Dashcam Architecture

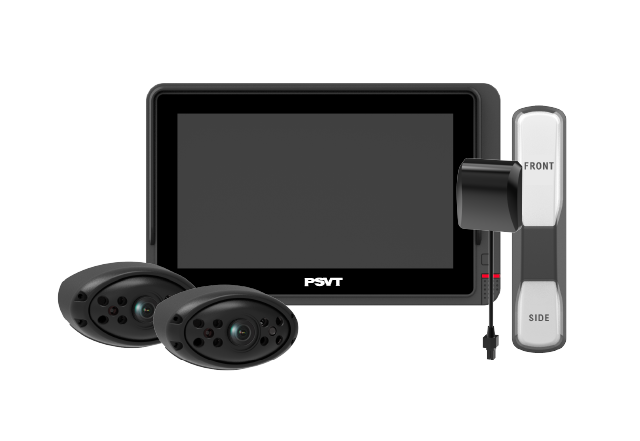

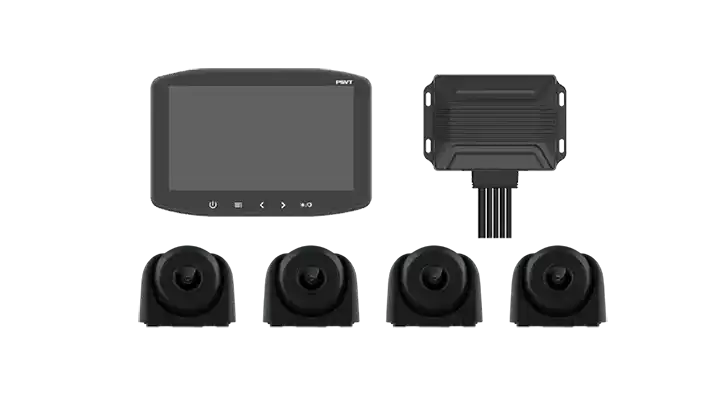

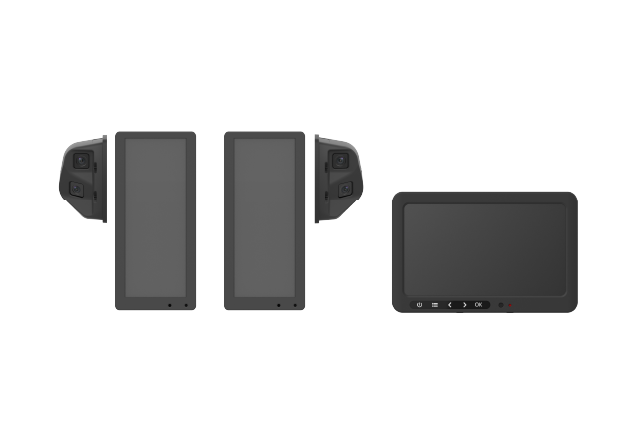

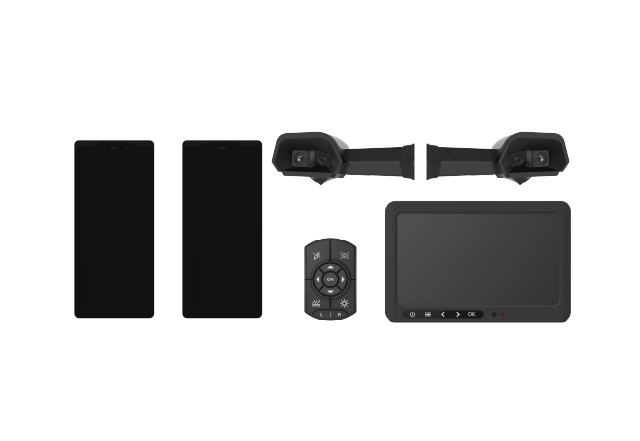

Modern AI dashcams are no longer just “cameras with SD cards.” They are edge computing platforms that combine optics, processing, connectivity, and AI models in a compact form factor.

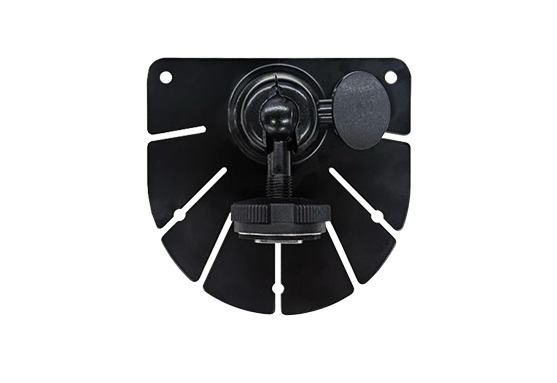

Hardware: Imaging and Compute at the Edge

Typical specs in commercial-vehicle–grade AI dashcams now include:

- High-resolution sensors: 1080p or 2K as standard, with WDR/HDR for tunnel, backlight, and night scenes.

- Low-light / night-vision performance: Ensures consistent detection quality in real-world duty cycles (early morning, night routes, bad weather).

- Automotive-grade SoC / NPU:

  - 1–10+ TOPS edge compute to run multiple AI models (DMS, ADAS, BSD) in real time.

  - Built-in hardware encoders for H.264/H.265 video streaming and recording.

This allows the device to analyze and decide locally, without depending on cloud connectivity or high-latency links.

Software: Stack of AI Safety Functions

On the software side, AI dashcams typically integrate three major functional blocks:

DMS – Driver Monitoring System

- Detects fatigue, distraction, phone use, seatbelt violations, etc.

- Uses face, gaze, and head-pose tracking to trigger warnings before high-risk behavior leads to an incident.

- Enables targeted coaching instead of generic “drive safer” campaigns.

ADAS – Advanced Driver Assistance Systems

- Functions include Forward Collision Warning (FCW), Lane Departure Warning (LDW), headway monitoring, and pedestrian/cyclist detection.

- Works as an always-on “second set of eyes” for the driver, particularly useful in dense traffic and long-haul operations.

BSD – Blind Spot Detection

- Monitors near-side and rear blind spots, especially around trucks and buses in city environments.

- Aligns with UN R151 (BSIS) and can form part of a UN R159 (MOIS) strategy when combined with front-zone detection.
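The forward collision and headway warnings listed above typically rest on two simple quantities: time headway (gap divided by own speed) and time-to-collision (gap divided by closing speed). The following is a minimal sketch of that arithmetic, not any vendor’s algorithm; the function names and the 2.5 s / 2.0 s thresholds are illustrative assumptions.

```python
import math


def time_headway_s(gap_m: float, ego_speed_mps: float) -> float:
    """Seconds until the ego vehicle reaches the lead vehicle's current position."""
    if ego_speed_mps <= 0:
        return math.inf  # stationary: no meaningful headway
    return gap_m / ego_speed_mps


def time_to_collision_s(gap_m: float, ego_speed_mps: float, lead_speed_mps: float) -> float:
    """Seconds until impact if both vehicles keep their current speeds."""
    closing_speed = ego_speed_mps - lead_speed_mps
    if closing_speed <= 0:
        return math.inf  # not closing in on the lead vehicle
    return gap_m / closing_speed


def warning_level(gap_m: float, ego_speed_mps: float, lead_speed_mps: float,
                  ttc_limit_s: float = 2.5, headway_limit_s: float = 2.0) -> str:
    """Return "FCW", "HEADWAY", or "OK" (thresholds are hypothetical defaults)."""
    if time_to_collision_s(gap_m, ego_speed_mps, lead_speed_mps) < ttc_limit_s:
        return "FCW"
    if time_headway_s(gap_m, ego_speed_mps) < headway_limit_s:
        return "HEADWAY"
    return "OK"
```

For example, a 30 m gap at 25 m/s behind a vehicle doing 10 m/s gives a time-to-collision of 2 s, which this sketch would flag as a forward collision warning.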

These AI models are optimized to run on the device itself, with only events, clips, and essential metadata pushed to the cloud. This significantly reduces bandwidth and storage costs while maintaining a reliable audit trail.
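To make “events and essential metadata” concrete, here is a minimal sketch of what a compact event record might look like. The field names and the `SafetyEvent` type are hypothetical; real vendors define their own schemas.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class SafetyEvent:
    """Hypothetical event record; field names are illustrative only."""
    vehicle_id: str
    event_type: str      # e.g. "FCW", "DISTRACTION"
    timestamp_utc: str   # ISO 8601 string
    speed_kmh: float
    latitude: float
    longitude: float
    clip_ref: str        # reference to a clip that is stored or uploaded separately


def to_upload_payload(event: SafetyEvent) -> bytes:
    """Serialize the event as compact JSON for upload."""
    return json.dumps(asdict(event), separators=(",", ":")).encode("utf-8")


event = SafetyEvent(
    vehicle_id="truck-17",
    event_type="FCW",
    timestamp_utc="2024-05-01T08:15:30Z",
    speed_kmh=62.5,
    latitude=52.52,
    longitude=13.405,
    clip_ref="clips/truck-17/000123.mp4",
)
payload = to_upload_payload(event)
```

A record like this is a few hundred bytes, so the metadata itself is negligible next to video; the bandwidth saving comes from uploading only the short clips tied to such events.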

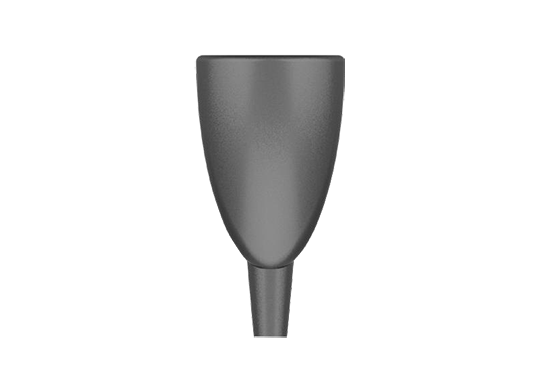

Panic Button and Event Workflows

For control centers and safety managers, features like a dedicated “one-button alarm” are critical:

- The driver presses the button in an emergency or conflict.

- Live video and vehicle data are immediately transmitted to the backend.

- Dispatchers can coordinate assistance, record incident context, and protect both driver and company from false accusations.

This turns the dashcam into an operational tool, not just a passive recorder.
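One way to capture incident context is a short rolling buffer of recent video segments, so that a button press can also attach what happened just before it. The sketch below assumes such a pre-roll buffer exists (the workflow above does not specify one); `PreRollBuffer` and the alarm fields are hypothetical names.

```python
from collections import deque


class PreRollBuffer:
    """Keeps only the most recent video segment IDs (oldest are dropped)."""

    def __init__(self, max_segments: int) -> None:
        self._segments = deque(maxlen=max_segments)

    def add_segment(self, segment_id: str) -> None:
        self._segments.append(segment_id)

    def on_panic_button(self, vehicle_id: str) -> dict:
        """Build an alarm message that references the buffered pre-roll."""
        return {
            "vehicle_id": vehicle_id,
            "event_type": "PANIC_BUTTON",
            "pre_roll_segments": list(self._segments),
            "start_live_stream": True,
        }


buffer = PreRollBuffer(max_segments=3)
for i in range(1, 6):
    buffer.add_segment(f"seg-{i}")
alarm = buffer.on_panic_button("truck-17")
```

Here only the last three segments survive, so the alarm references `seg-3` through `seg-5`.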

Integration: How AI Dashcams Fit into Your Existing Stack

For technical buyers, the key question is rarely “What can the device do?” but rather “How cleanly does it integrate?”

Connectivity and Data Flow

Modern AI dashcams typically support:

- Cellular (4G / 5G) for live streaming, OTA updates, and event uploads.

- Wi-Fi / Ethernet options for depot offload or integration with in-vehicle gateways.

- CAN / LIN / digital I/O to receive vehicle status (speed, indicators, braking, gear) and trigger recordings or warnings more intelligently.
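As an illustration of how vehicle status can trigger recordings, the sketch below flags hard-braking moments from a series of speed readings. It assumes the speed has already been decoded from the vehicle bus into `(time_s, speed_kmh)` pairs; the 3.0 m/s² threshold is a hypothetical default, not a standard value.

```python
def hard_brake_indices(samples, decel_threshold_mps2=3.0):
    """Return indices of samples where deceleration since the previous
    sample meets or exceeds the threshold.

    samples: list of (time_s, speed_kmh) tuples in time order.
    """
    hits = []
    for i in range(1, len(samples)):
        t0, v0_kmh = samples[i - 1]
        t1, v1_kmh = samples[i]
        dt = t1 - t0
        if dt <= 0:
            continue  # skip duplicate or out-of-order timestamps
        decel_mps2 = (v0_kmh - v1_kmh) / 3.6 / dt  # km/h -> m/s, then per second
        if decel_mps2 >= decel_threshold_mps2:
            hits.append(i)
    return hits


readings = [(0.0, 72.0), (1.0, 70.2), (2.0, 57.6), (3.0, 54.0)]
triggers = hard_brake_indices(readings)
```

In these readings, only the drop from 70.2 to 57.6 km/h within one second (about 3.5 m/s²) crosses the threshold, so a recording would be triggered at index 2.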

This allows AI dashcams to become part of a broader IoT / telematics architecture, feeding data into:

- Fleet management platforms

- Risk scoring engines

- Insurance partners’ systems

- OEM backend systems

Cloud Platform and APIs

Most vendors now offer:

- Web-based dashboards for safety managers and operations staff.

- Role-based access control to separate operational use from HR and legal inquiries.

- Open APIs / webhooks for integration with third-party TMS/FMS platforms.
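When consuming webhooks from a dashcam platform, the receiving system should confirm that each request really comes from the vendor. How a given vendor signs its webhooks is vendor-specific; the sketch below assumes an HMAC-SHA256 signature over the raw request body with a shared secret, which is a common pattern.

```python
import hashlib
import hmac


def sign_body(secret: bytes, body: bytes) -> str:
    """Hex-encoded HMAC-SHA256 of the raw request body."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def is_authentic(secret: bytes, body: bytes, signature_hex: str) -> bool:
    """Compare in constant time to avoid leaking timing information."""
    expected = sign_body(secret, body)
    return hmac.compare_digest(expected, signature_hex)


# Hypothetical secret and body, for illustration only.
shared_secret = b"example-shared-secret"
raw_body = b'{"event_type":"FCW","vehicle_id":"truck-17"}'
signature = sign_body(shared_secret, raw_body)
```

The check must run on the exact bytes received, before any JSON parsing or re-serialization, because even a whitespace change alters the signature.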

For fleets already running telematics from another provider, an AI dashcam with flexible APIs can be deployed as a safety add-on rather than a complete platform replacement.

Business Case: Where the ROI Really Comes From

AI dashcams rarely justify themselves on hardware price alone. The ROI comes from downstream impact:

Accident reduction

- Fewer collisions and near-misses.

- Lower repair costs and reduced vehicle downtime.

Liability and claims management

- Clear video and event data reduce “he said, she said” disputes.

- Faster claims processing and better negotiation with insurers.

Insurance premiums and risk-based pricing

- Some insurers offer discounts or improved terms when fleets deploy certified AI safety systems.

- Over time, improved driver scores can be used to negotiate better rates.

Driver performance and retention

- Data-driven coaching programs (based on real events, not subjective complaints).

- Fairer performance assessment: good drivers can prove their professionalism with data.

Operational visibility

- Understanding how, where, and when high-risk behaviors occur allows targeted interventions (routes, schedules, loading patterns, etc.).

For many fleets, a single severe accident avoided or a modest reduction in claim frequency is enough to pay back the system cost across a vehicle’s service life.
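The payback logic can be sketched as simple arithmetic. Every figure below is a hypothetical placeholder, not market data; substitute your own hardware cost, service fee, claims baseline, and expected reduction.

```python
def payback_months(system_cost_per_vehicle: float,
                   monthly_fee: float,
                   baseline_annual_claims_cost: float,
                   claim_reduction_fraction: float):
    """Months until cumulative net savings cover the up-front system cost.

    Returns None if the monthly saving does not exceed the monthly fee.
    """
    monthly_saving = baseline_annual_claims_cost * claim_reduction_fraction / 12
    net_monthly = monthly_saving - monthly_fee
    if net_monthly <= 0:
        return None
    return system_cost_per_vehicle / net_monthly


# Hypothetical inputs: 600 up-front, 20 per month, 4,800 per year in
# claims costs per vehicle, and a 15% reduction in those costs.
months = payback_months(600, 20, 4800, 0.15)
```

With these placeholder inputs the monthly saving is 60, the net benefit after fees is 40, and the system pays for itself in roughly 15 months. The sketch ignores harder-to-quantify effects such as downtime and driver retention.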

What’s Next: From Single Camera to Multi-Modal Perception

We are currently at the “AI dashcam as intelligent sensor” stage. The next steps are already visible:

- Sensor fusion – combining camera data with radar, ultrasonic sensors, and high-precision maps to build a more robust perception layer.

- Domain controllers – shifting from standalone devices to integrated ADAS/Autonomy ECUs where the AI dashcam becomes one of several coordinated inputs.

- Standardized event semantics – aligning how “near misses,” “hard brakes,” and “risky behavior” are defined and shared across OEM, tier-one, and fleet systems.
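Until shared definitions exist, fleets that combine data from several systems usually need a small normalization layer. The sketch below maps vendor-specific labels onto one canonical set; both the canonical names and the vendor labels are invented for illustration.

```python
from enum import Enum


class CanonicalEvent(Enum):
    HARD_BRAKE = "hard_brake"
    NEAR_MISS = "near_miss"
    DISTRACTION = "distraction"
    UNKNOWN = "unknown"


# Hypothetical vendor labels mapped to canonical event types.
VENDOR_LABELS = {
    "harsh_braking": CanonicalEvent.HARD_BRAKE,
    "hardbrake": CanonicalEvent.HARD_BRAKE,
    "fcw_critical": CanonicalEvent.NEAR_MISS,
    "phone_use": CanonicalEvent.DISTRACTION,
}


def normalize(label: str) -> CanonicalEvent:
    """Map a vendor label to a canonical event, tolerating case and separators."""
    key = label.strip().lower().replace(" ", "_").replace("-", "_")
    return VENDOR_LABELS.get(key, CanonicalEvent.UNKNOWN)
```

Mapping unrecognized labels to `UNKNOWN` rather than raising an error keeps ingestion running when a vendor adds a new event type, while still making the gap visible in reports.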

For fleets and OEMs, the strategic question is no longer “Will we use AI dashcams?” but “Which architecture gives us the flexibility to evolve into this multi-modal, software-defined future without constant rip-and-replace?”

How Technical Buyers Can De-Risk Their Choice

When evaluating AI dashcam vendors, technical and fleet buyers should look beyond spec sheets and ask:

Regulatory alignment:

- Does the solution roadmap explicitly track UN R151/R159, the EU General Safety Regulation (GSR), and local regulations?

- Is there certification or third-party validation?

Model maturity and real-world training:

- Are the AI models trained and validated on commercial vehicle scenarios, not just passenger cars?

- Is there evidence from deployments in similar regions and use cases?

Integration and openness:

- Are there well-documented APIs and integration guides?

- Can it coexist with your current telematics or must everything be replaced?

Lifecycle and support:

- Does the vendor offer OTA updates, long-term firmware support, and backwards compatibility?

- Are there regional service and SLA commitments?

Data governance and privacy:

- Are there clear policies on video retention, access control, and compliance with GDPR or local data rules?

Final Thought: From Project to Platform

For fleets, AI dashcams should not be treated as a one-off gadget project. They’re becoming part of the core safety and data platform of the vehicle.

Done right, an AI dashcam deployment does three things at once:

- Keeps you aligned with tightening regulations.

- Reduces real-world risk and protects drivers and vulnerable road users.

- Creates a structured, analyzable dataset that will power the next generation of optimization and automation.

In other words, the small device on the windshield is no longer just a camera. It’s a strategic sensor node in your future fleet architecture.